All search engines rely on crawlers: programs, often called bots or spiders, that follow links from webpage to webpage to find new content to add to the search index. When you use a search engine, relevant results are pulled from that index and ranked by an algorithm.

If that sounds weird, it’s because it is. But if you want to rank higher in the SERPs and get more traffic to your website, you need a basic understanding of how search engines find, index, and rank content.

Table of Contents

- What is a search engine?

- How do search engines work?

- How do search engines build their index?

- How do search engines rank websites or web pages?

Before we move on to the technical part, let’s first make sure we understand what search engines are and why they exist.

What is a search engine?

A search engine is a software system that is designed to search for websites on the internet based on the user’s search queries.

It finds results in its database, sorts them, and builds a list of those results using its search algorithms. The page on which the results appear is called a search engine results page, or SERP.

Although there are many search engines, such as Google, Bing, and Yahoo, the overall principles of searching and providing results are the same across all of them.

How do search engines work?

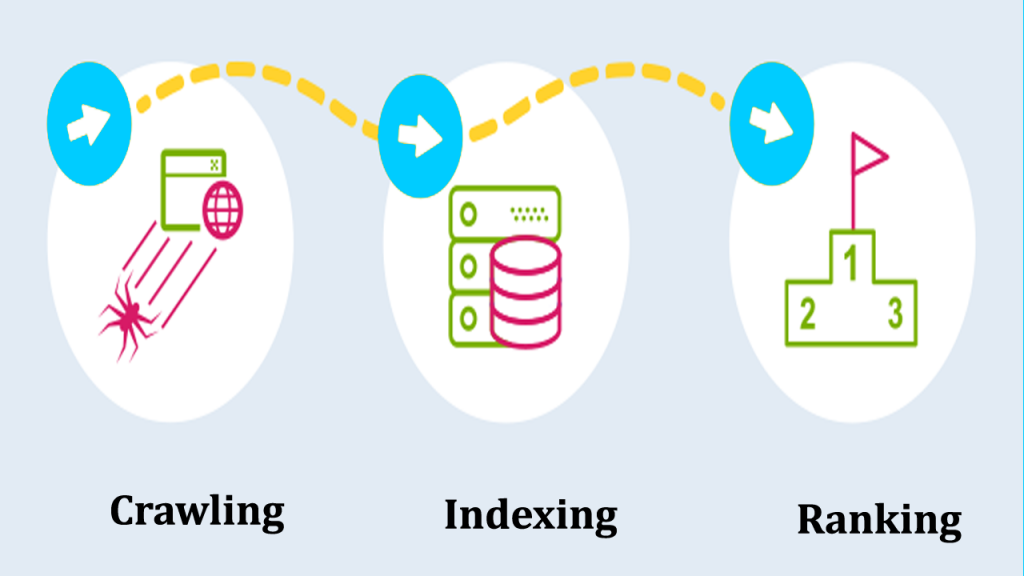

Search engines differ from one another in how they deliver results to users, but all of them are built on three important processes:

1. Crawling

Discovering new web pages on the internet starts with a process called crawling.

All search engines use programs called web crawlers (also known as bots or spiders), which follow links from previously known pages to new ones that have yet to be discovered.

Whenever a web crawler finds a new webpage or website through a link, it scans the page and passes its content on for further processing, then continues looking for new webpages. This is what we call “crawling”.
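The link-following loop described above can be sketched as a breadth-first search. This is a minimal sketch, assuming a hypothetical in-memory `LINK_GRAPH` in place of real HTTP requests; a production crawler also has to download pages, respect robots.txt, and throttle its requests.

```python
from collections import deque

# Hypothetical link graph standing in for the web: each URL maps to the URLs
# its page links to. A real crawler would download each page over HTTP and
# extract the links from its HTML instead.
LINK_GRAPH = {
    "https://example.com/": ["https://example.com/blog", "https://example.com/about"],
    "https://example.com/blog": ["https://example.com/blog/post-1", "https://example.com/"],
    "https://example.com/about": [],
    "https://example.com/blog/post-1": ["https://example.org/"],
    "https://example.org/": [],
}


def crawl(seed_urls, max_pages=100):
    """Breadth-first crawl: follow links from known pages to discover new ones."""
    frontier = deque(seed_urls)   # URLs waiting to be visited
    discovered = set(seed_urls)   # every URL seen so far, so none is queued twice
    visited_order = []

    while frontier and len(visited_order) < max_pages:
        url = frontier.popleft()
        visited_order.append(url)  # "scan" the page and pass it on for processing
        for link in LINK_GRAPH.get(url, []):
            if link not in discovered:
                discovered.add(link)
                frontier.append(link)
    return visited_order


print(crawl(["https://example.com/"]))
```

Starting from a single known URL, the crawler reaches every page that is linked, directly or indirectly, from it, including the page on another domain.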

2. Indexing

Once the bots have crawled the data, it’s time for indexing: the process of analyzing and storing the content from the webpages in the search engine’s database, called the “index”. It is essentially a massive library of all the websites the search engine has crawled.

Your website needs to be indexed to be displayed on a search engine results page. Keep in mind that crawling and indexing are continuous processes that repeat again and again to keep the database fresh. Once a webpage has been analyzed and stored in the index, it can be shown as a result for a relevant search query.
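Search indexes are commonly organized as an inverted index, which maps each word to the pages that contain it, much like the index at the back of a book. The sketch below is a toy version under that assumption; the `SearchIndex` class and its methods are hypothetical names, and re-adding a page replaces its old entry to mimic the “keep the database fresh” cycle.

```python
import re
from collections import defaultdict


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


class SearchIndex:
    """Toy inverted index: maps each word to the set of URLs containing it."""

    def __init__(self):
        self.postings = defaultdict(set)  # word -> {url, ...}
        self.pages = {}                   # url -> words stored for that page

    def add_page(self, url, text):
        # Re-crawling a page replaces its old entry, keeping the index fresh.
        self.remove_page(url)
        words = set(tokenize(text))
        self.pages[url] = words
        for word in words:
            self.postings[word].add(url)

    def remove_page(self, url):
        for word in self.pages.pop(url, set()):
            self.postings[word].discard(url)
            if not self.postings[word]:
                del self.postings[word]  # drop words no page contains any more

    def pages_containing(self, word):
        return set(self.postings.get(word.lower(), set()))


index = SearchIndex()
index.add_page("https://example.com/a", "How search engines crawl the web")
index.add_page("https://example.com/b", "Baking bread at home")
print(index.pages_containing("crawl"))  # {'https://example.com/a'}

# The page changes and is re-crawled; the old words disappear from the index.
index.add_page("https://example.com/a", "How search engines rank pages")
print(index.pages_containing("crawl"))  # set()
```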

3. Ranking

The final step involves picking the best results and creating the list of pages that will appear on the results page. Every search engine uses dozens of ranking signals, and most of them are kept secret from the public.
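Because the real signals and their weights are secret, the best we can do is a toy illustration of the general idea: score each candidate page on several signals, combine the scores, and sort. In this sketch the three signals (`relevance`, `authority`, `freshness`) and the `WEIGHTS` values are purely hypothetical and are not taken from any real search engine.

```python
from dataclasses import dataclass

# Hypothetical signals and weights, chosen only for illustration. Real search
# engines use many more signals and do not publish how they are weighted.
WEIGHTS = {"relevance": 0.6, "authority": 0.3, "freshness": 0.1}


@dataclass
class Candidate:
    url: str
    relevance: float  # how well the page matches the query, 0..1
    authority: float  # e.g. derived from links pointing at the page, 0..1
    freshness: float  # how recently the page was updated, 0..1


def score(page: Candidate) -> float:
    """Combine the signals into a single number using a weighted sum."""
    return sum(weight * getattr(page, signal) for signal, weight in WEIGHTS.items())


def rank(candidates):
    """Return candidates ordered best-first, as they would appear on the results page."""
    return sorted(candidates, key=score, reverse=True)


results = rank([
    Candidate("https://example.com/a", relevance=0.9, authority=0.2, freshness=0.5),
    Candidate("https://example.com/b", relevance=0.7, authority=0.9, freshness=0.1),
    Candidate("https://example.com/c", relevance=0.3, authority=0.4, freshness=1.0),
])
print([page.url for page in results])
```

Here page `b` outranks page `a` even though `a` matches the query better, because `b` is much more authoritative; that trade-off between signals is exactly what the (secret) weights decide.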

How do search engines build their index?

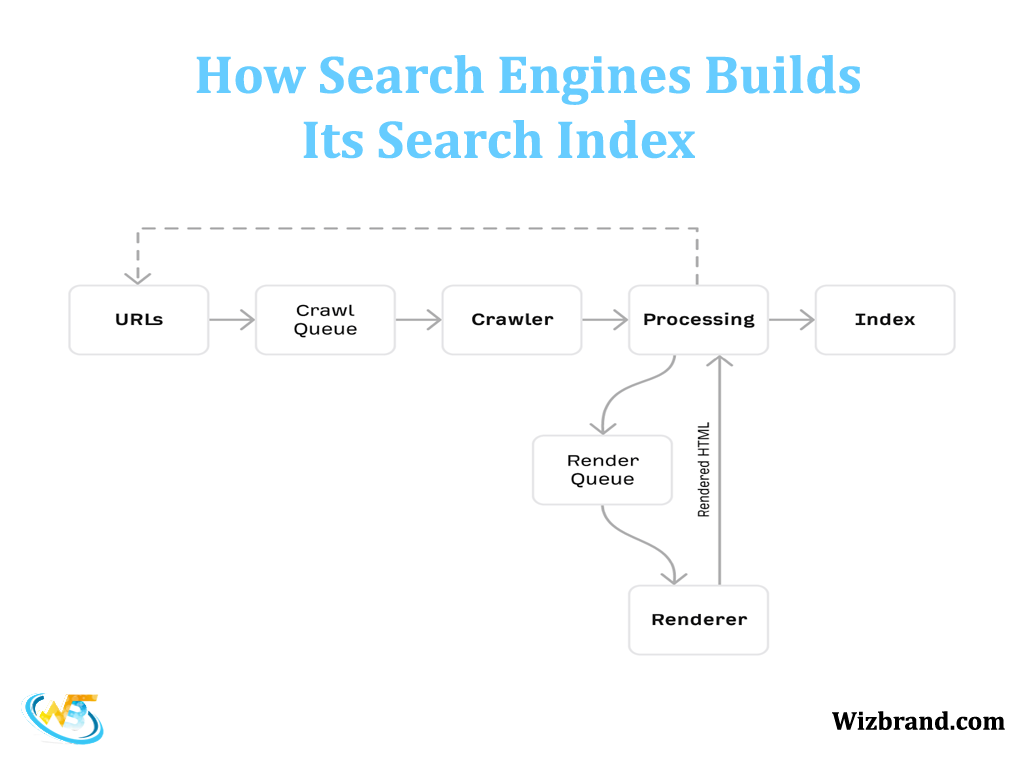

Well-known search engines like Google and Bing have billions of pages in their search indexes. Before discussing ranking algorithms, let’s go through the mechanisms used to build and maintain a web index.

Here is a simplified view of the process. Let me walk you through how indexing works with the help of the image below:

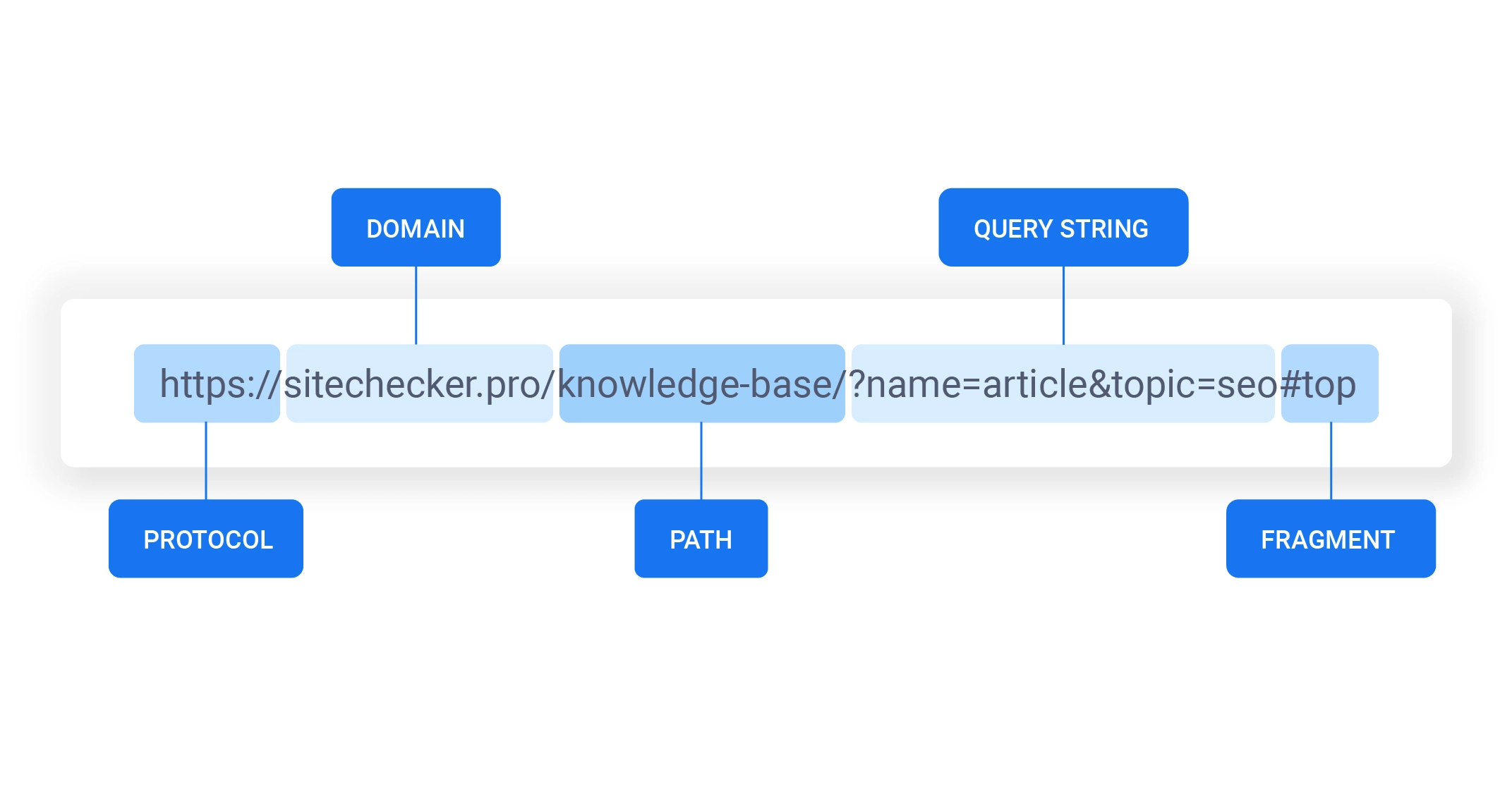

URLs

Everything starts with a list of known URLs. Google discovers these through various methods, but the three most common are:

Backlinks

Search engines already have an index containing trillions of web pages. If one of those pages links to one of your pages, search engines can discover your page by following that link.

Sitemaps

Sitemaps list all of the important pages on your website. If you submit your sitemap to search engines, it can help them discover your website faster.
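An XML sitemap following the sitemaps.org protocol is a `<urlset>` of `<url>` entries, each with a required `<loc>` and an optional `<lastmod>`. The sketch below shows how a crawler might read one with Python’s standard `xml.etree.ElementTree`; the `sitemap_urls` helper and the example URLs are hypothetical.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
NS = {"sm": "http://www.sitemaps.org/schemas/sitemap/0.9"}

SITEMAP_XML = """<urlset xmlns="http://www.sitemaps.org/schemas/sitemap/0.9">
  <url>
    <loc>https://example.com/</loc>
    <lastmod>2024-01-15</lastmod>
  </url>
  <url>
    <loc>https://example.com/blog/how-search-engines-work</loc>
  </url>
</urlset>"""


def sitemap_urls(xml_text):
    """Return (loc, lastmod) pairs; lastmod is None when the entry omits it."""
    root = ET.fromstring(xml_text)
    entries = []
    for url in root.findall("sm:url", NS):
        loc = url.findtext("sm:loc", namespaces=NS)  # required by the protocol
        lastmod = url.findtext("sm:lastmod", namespaces=NS)
        entries.append((loc.strip(), lastmod))
    return entries


for loc, lastmod in sitemap_urls(SITEMAP_XML):
    print(loc, lastmod)
```

Every `loc` becomes a known URL for the crawler, and `lastmod` gives it a hint about which pages have changed recently.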

URL Submissions

Search engines also accept submissions of individual URLs; Google, for example, lets you submit them through Google Search Console.

Crawlers

Crawling is where a computer bot called a spider visits and downloads the discovered pages.

It’s important to note that Google doesn’t always crawl pages in the order it discovers them.

Search engines queue URLs for crawling based on several factors, including:

- The PageRank of the URL

- How often the URL changes

- Whether or not it’s new


This matters because it means search engines may crawl and index some of your pages before others. If you have a large website, it can take a while for search engines to crawl it fully.
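One way to picture this is a priority queue: every discovered URL gets a score, and the crawler always fetches the highest-scoring URL next. This is a minimal sketch under assumptions: the `crawl_priority` formula and its weights are invented for illustration and only mirror the three factors listed above, not any real search engine’s scheduler.

```python
import heapq


def crawl_priority(pagerank, changes_per_week, is_new):
    """Hypothetical priority score: higher means crawl sooner.

    The factors mirror the list above; the weights are made up for illustration.
    """
    return 10 * pagerank + 0.5 * changes_per_week + (2 if is_new else 0)


class CrawlQueue:
    def __init__(self):
        self._heap = []
        self._counter = 0  # tie-breaker so equal priorities keep insertion order

    def push(self, url, pagerank, changes_per_week, is_new):
        priority = crawl_priority(pagerank, changes_per_week, is_new)
        # heapq is a min-heap, so store the negated priority to pop the highest first.
        heapq.heappush(self._heap, (-priority, self._counter, url))
        self._counter += 1

    def pop(self):
        _, _, url = heapq.heappop(self._heap)
        return url

    def __len__(self):
        return len(self._heap)


queue = CrawlQueue()
queue.push("https://example.com/old-archive", pagerank=0.1, changes_per_week=0, is_new=False)
queue.push("https://example.com/", pagerank=0.8, changes_per_week=7, is_new=False)
queue.push("https://example.com/new-post", pagerank=0.2, changes_per_week=1, is_new=True)
print([queue.pop() for _ in range(len(queue))])
```

The frequently updated, well-linked home page is crawled first, the new post second, and the rarely changing archive page last, even though the archive was discovered first.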

Processing and rendering

Processing is where search engines work to understand and extract key information from crawled pages. Nobody outside of Google knows every detail about this process, but the important parts for our purposes are extracting links and storing content for indexing.
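The two processing steps named above, extracting links and keeping the content for indexing, can be sketched with Python’s standard `html.parser`. This is a simplified illustration that ignores rendering (it never runs JavaScript); `LinkExtractor` and `process_page` are hypothetical names.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin


class LinkExtractor(HTMLParser):
    """Collect absolute URLs from <a href="..."> tags and the page's visible text."""

    def __init__(self, base_url):
        super().__init__()
        self.base_url = base_url
        self.links = []
        self.text_parts = []

    def handle_starttag(self, tag, attrs):
        if tag == "a":
            for name, value in attrs:
                if name == "href" and value:
                    # Resolve relative links like "/about" against the page URL.
                    self.links.append(urljoin(self.base_url, value))

    def handle_data(self, data):
        if data.strip():
            self.text_parts.append(data.strip())


def process_page(url, html):
    """Return (links, text): links go back to the crawler, text goes to the indexer."""
    parser = LinkExtractor(url)
    parser.feed(html)
    parser.close()
    return parser.links, " ".join(parser.text_parts)


html = ('<html><body><h1>Hello</h1><p>See <a href="/about">about us</a> '
        'or <a href="https://example.org/">a partner</a>.</p></body></html>')
links, text = process_page("https://example.com/blog/", html)
print(links)
print(text)
```

The extracted links feed new URLs back into the crawl queue, while the text is what ends up stored in the index.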

Google has to render pages to process them fully. Rendering is where Google runs the page’s code to understand how the page looks to users.

That said, some processing happens before rendering and some after, as you can see in the diagram.

Index

Indexing is where processed information from crawled pages is added to a massive database called the search index. This is essentially a digital library of trillions of web pages, and it is where Google’s search results come from. That’s an important point. When you type a query into a search engine, you’re not directly searching the internet for matching results. You’re searching the search engine’s index of web pages. If a web page isn’t in the search index, search engine users won’t find it. That’s why getting your website indexed in major search engines like Google and Bing is so important.
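The point that a query searches the index, not the live web, can be made concrete with a tiny lookup. This sketch assumes a hypothetical two-page `INDEX` and simple “all words must match” semantics; real engines match far more loosely. A page that was never indexed simply cannot appear in the results, no matter what it contains.

```python
import re


def tokenize(text):
    """Lowercase the text and split it into alphanumeric words."""
    return re.findall(r"[a-z0-9]+", text.lower())


# A tiny, hypothetical index: only pages that were crawled and indexed are in it.
# Imagine https://example.com/not-indexed exists on the web but was never indexed.
INDEX = {
    "https://example.com/crawling": "How search engine crawlers discover pages",
    "https://example.com/ranking": "How search engines rank pages",
}


def build_inverted_index(pages):
    """Map each word to the set of indexed URLs that contain it."""
    inverted = {}
    for url, text in pages.items():
        for word in set(tokenize(text)):
            inverted.setdefault(word, set()).add(url)
    return inverted


def search(inverted, query):
    """Return the indexed URLs that contain every word in the query."""
    words = tokenize(query)
    if not words:
        return set()
    results = set(inverted.get(words[0], set()))
    for word in words[1:]:
        results &= inverted.get(word, set())
    return results


inverted = build_inverted_index(INDEX)
print(search(inverted, "rank pages"))
print(search(inverted, "not indexed"))
```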

How do search engines rank websites or web pages?

To rank websites, the search engine relies on web crawlers to scan and index pages. Every page is then rated according to the search engine’s assessment of its authority and usefulness to the end user. Finally, using an algorithm with over 210 known factors, the search engine orders the pages on a search results page.
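One well-known way to estimate a page’s authority from links is PageRank, the same signal mentioned in the crawl-queue factors earlier: a page is important if important pages link to it. The sketch below is a simplified power-iteration version of the classic published formula with the usual damping factor of 0.85; it is an illustration, not how Google ranks pages today, and the example link graph is hypothetical.

```python
def pagerank(links, damping=0.85, iterations=50):
    """Simplified PageRank via power iteration.

    links maps each page to the pages it links to. Pages with no outgoing
    links spread their score evenly over all pages.
    """
    pages = set(links) | {p for targets in links.values() for p in targets}
    n = len(pages)
    ranks = {page: 1.0 / n for page in pages}
    for _ in range(iterations):
        # Every page gets a small base score (the "random jump" part).
        new_ranks = {page: (1.0 - damping) / n for page in pages}
        for page in pages:
            targets = links.get(page, [])
            if targets:
                # A page splits its score evenly among the pages it links to.
                share = damping * ranks[page] / len(targets)
                for target in targets:
                    new_ranks[target] += share
            else:
                # Dangling page: distribute its score across every page.
                for other in pages:
                    new_ranks[other] += damping * ranks[page] / n
        ranks = new_ranks
    return ranks


links = {"A": ["B", "C"], "B": ["C"], "C": ["A"], "D": ["C"]}
ranks = pagerank(links)
print(sorted(ranks, key=ranks.get, reverse=True))
```

Page `C` receives links from three pages and ends up with the highest score, while `D`, which nothing links to, ends up last.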

In other words, appearing higher in the search results for a given query means the search engine considers your page more relevant and authoritative for that query than the pages ranked below it.

These search results pages answer specific search queries, which are made up of keywords and phrases. A search engine’s AI can also understand the meaning behind each query, so it processes queries as concepts rather than just individual words. Natural language processing (NLP) is the name given to algorithms that interpret and process language in a way that resembles how people do.